Add open-webui (ollama-webui) related files


BIN

Chinese-Llama-2-7b/20240112_main.zip

Normal file

Binary file not shown.

BIN

Docker-LLaMA2-Chat/20240112_main.zip

Normal file

Binary file not shown.

BIN

ollama-webui/20240115_main.zip

Normal file

Binary file not shown.

16

ollama-webui/ollama-webui-main/.dockerignore

Normal file

@@ -0,0 +1,16 @@
.DS_Store
node_modules
/.svelte-kit
/package
.env
.env.*
!.env.example
vite.config.js.timestamp-*
vite.config.ts.timestamp-*
__pycache__
.env
_old
uploads
.ipynb_checkpoints
**/*.db
_test
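The `.env` lines and the `!.env.example` exception above depend on the rule Docker documents for `.dockerignore`: when several lines match a path, the last matching line decides whether the path is excluded, and a leading `!` turns a match into an exception. Below is a minimal Python sketch of that evaluation. It assumes a simplified matcher: `is_ignored` is a hypothetical helper built on `fnmatch`, it compares bare file names only, and it ignores leading `/`, `**`, and directory rules.

```python
from fnmatch import fnmatchcase

# Subset of the .dockerignore patterns above that concern env files.
PATTERNS = [".env", ".env.*", "!.env.example"]


def is_ignored(filename: str, patterns: list[str]) -> bool:
    """Return True if filename is excluded; the last matching pattern wins.

    Simplified model: only the bare file name is compared, and anchoring
    ('/'), '**' and directory-only rules are not handled.
    """
    ignored = False
    for pattern in patterns:
        negate = pattern.startswith("!")
        glob = pattern[1:] if negate else pattern
        if fnmatchcase(filename, glob):
            # A later match overrides any earlier decision.
            ignored = not negate
    return ignored


if __name__ == "__main__":
    for name in [".env", ".env.local", ".env.example", "package.json"]:
        print(name, "ignored" if is_ignored(name, PATTERNS) else "kept")
```

Under this model, `.env` and `.env.local` stay out of the build context and `.env.example` is sent, which matches the intent of those three lines.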

13

ollama-webui/ollama-webui-main/.eslintignore

Normal file

@@ -0,0 +1,13 @@
.DS_Store
node_modules
/build
/.svelte-kit
/package
.env
.env.*
!.env.example

# Ignore files for PNPM, NPM and YARN
pnpm-lock.yaml
package-lock.json
yarn.lock

30

ollama-webui/ollama-webui-main/.eslintrc.cjs

Normal file

@@ -0,0 +1,30 @@
module.exports = {
	root: true,
	extends: [
		'eslint:recommended',
		'plugin:@typescript-eslint/recommended',
		'plugin:svelte/recommended',
		'prettier'
	],
	parser: '@typescript-eslint/parser',
	plugins: ['@typescript-eslint'],
	parserOptions: {
		sourceType: 'module',
		ecmaVersion: 2020,
		extraFileExtensions: ['.svelte']
	},
	env: {
		browser: true,
		es2017: true,
		node: true
	},
	overrides: [
		{
			files: ['*.svelte'],
			parser: 'svelte-eslint-parser',
			parserOptions: {
				parser: '@typescript-eslint/parser'
			}
		}
	]
};

1

ollama-webui/ollama-webui-main/.github/FUNDING.yml

vendored

Normal file

@@ -0,0 +1 @@
github: tjbck

60

ollama-webui/ollama-webui-main/.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

@@ -0,0 +1,60 @@
---
name: Bug report
about: Create a report to help us improve
title: ''
labels: ''
assignees: ''
---

# Bug Report

## Description

**Bug Summary:**
[Provide a brief but clear summary of the bug]

**Steps to Reproduce:**
[Outline the steps to reproduce the bug. Be as detailed as possible.]

**Expected Behavior:**
[Describe what you expected to happen.]

**Actual Behavior:**
[Describe what actually happened.]

## Environment

- **Operating System:** [e.g., Windows 10, macOS Big Sur, Ubuntu 20.04]
- **Browser (if applicable):** [e.g., Chrome 100.0, Firefox 98.0]

## Reproduction Details

**Confirmation:**

- [ ] I have read and followed all the instructions provided in the README.md.
- [ ] I have reviewed the troubleshooting.md document.
- [ ] I have included the browser console logs.
- [ ] I have included the Docker container logs.

## Logs and Screenshots

**Browser Console Logs:**
[Include relevant browser console logs, if applicable]

**Docker Container Logs:**
[Include relevant Docker container logs, if applicable]

**Screenshots (if applicable):**
[Attach any relevant screenshots to help illustrate the issue]

## Installation Method

[Describe the method you used to install the project, e.g., manual installation, Docker, package manager, etc.]

## Additional Information

[Include any additional details that may help in understanding and reproducing the issue. This could include specific configurations, error messages, or anything else relevant to the bug.]

## Note

If the bug report is incomplete or does not follow the provided instructions, it may not be addressed. Please ensure that you have followed the steps outlined in the README.md and troubleshooting.md documents, and provide all necessary information for us to reproduce and address the issue. Thank you!

19

ollama-webui/ollama-webui-main/.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

@@ -0,0 +1,19 @@
---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: ''
assignees: ''
---

**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

**Additional context**
Add any other context or screenshots about the feature request here.

59

ollama-webui/ollama-webui-main/.github/workflows/docker-build.yaml

vendored

Normal file

@@ -0,0 +1,59 @@
#
name: Create and publish a Docker image

# Configures this workflow to run every time a change is pushed to the `main` or `dev` branch, or a tag matching `v*` is pushed.
on:
  push:
    branches:
      - main
      - dev
    tags:
      - v*

# Defines two custom environment variables for the workflow. These are used for the Container registry domain, and a name for the Docker image that this workflow builds.
env:
  REGISTRY: ghcr.io
  IMAGE_NAME: ${{ github.repository }}

# There is a single job in this workflow. It's configured to run on the latest available version of Ubuntu.
jobs:
  build-and-push-image:
    runs-on: ubuntu-latest
    # Sets the permissions granted to the `GITHUB_TOKEN` for the actions in this job.
    permissions:
      contents: read
      packages: write
      #
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4
      # Required for multi architecture build
      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3
      # Required for multi architecture build
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3
      # Uses the `docker/login-action` action to log in to the Container registry using the account and password that will publish the packages. Once published, the packages are scoped to the account defined here.
      - name: Log in to the Container registry
        uses: docker/login-action@v3
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}
      # This step uses [docker/metadata-action](https://github.com/docker/metadata-action#about) to extract tags and labels that will be applied to the specified image. The `id` "meta" allows the output of this step to be referenced in a subsequent step. The `images` value provides the base name for the tags and labels.
      - name: Extract metadata (tags, labels) for Docker
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}
      # This step uses the `docker/build-push-action` action to build the image, based on your repository's `Dockerfile`. If the build succeeds, it pushes the image to GitHub Packages.
      # It uses the `context` parameter to define the build's context as the set of files located in the specified path. For more information, see "[Usage](https://github.com/docker/build-push-action#usage)" in the README of the `docker/build-push-action` repository.
      # It uses the `tags` and `labels` parameters to tag and label the image with the output from the "meta" step.
      - name: Build and push Docker image
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          platforms: linux/amd64,linux/arm64
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
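The `on.push` filters above start the image build for pushes to the `main` and `dev` branches and for tags that begin with `v`. Pushes to any other branch, such as `release`, do not trigger it. Below is a simplified Python sketch of that matching. It assumes GitHub's documented rule that `*` in a filter matches any run of characters except `/`. `triggers_workflow` is a hypothetical name, and other filter syntax such as `**`, `?`, and `!` is not modelled.

```python
import re

# Filters copied from the workflow's `on.push` block.
BRANCH_FILTERS = ["main", "dev"]
TAG_FILTERS = ["v*"]


def matches_filter(name: str, pattern: str) -> bool:
    """True if name matches pattern, where '*' matches any run except '/'."""
    regex = "[^/]*".join(re.escape(chunk) for chunk in pattern.split("*"))
    return re.fullmatch(regex, name) is not None


def triggers_workflow(ref: str) -> bool:
    """True if a push to ref (e.g. 'refs/heads/main') would start the job."""
    if ref.startswith("refs/heads/"):
        name, filters = ref[len("refs/heads/"):], BRANCH_FILTERS
    elif ref.startswith("refs/tags/"):
        name, filters = ref[len("refs/tags/"):], TAG_FILTERS
    else:
        return False
    return any(matches_filter(name, pattern) for pattern in filters)


if __name__ == "__main__":
    for ref in ["refs/heads/main", "refs/heads/release", "refs/tags/v1.0.0"]:
        print(ref, triggers_workflow(ref))
```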

27

ollama-webui/ollama-webui-main/.github/workflows/format-backend.yaml

vendored

Normal file

@@ -0,0 +1,27 @@
name: Python CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Format Backend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    strategy:
      matrix:
        node-version:
          - latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Python
        uses: actions/setup-python@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install yapf
      - name: Format backend
        run: bun run format:backend

22

ollama-webui/ollama-webui-main/.github/workflows/format-build-frontend.yaml

vendored

Normal file

@@ -0,0 +1,22 @@
name: Bun CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Format & Build Frontend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - run: bun --version
      - name: Install frontend dependencies
        run: bun install
      - name: Format frontend
        run: bun run format
      - name: Build frontend
        run: bun run build

27

ollama-webui/ollama-webui-main/.github/workflows/lint-backend.disabled

vendored

Normal file

@@ -0,0 +1,27 @@
name: Python CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Lint Backend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    strategy:
      matrix:
        node-version:
          - latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Python
        uses: actions/setup-python@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install pylint
      - name: Lint backend
        run: bun run lint:backend

21

ollama-webui/ollama-webui-main/.github/workflows/lint-frontend.disabled

vendored

Normal file

@@ -0,0 +1,21 @@
name: Bun CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Lint Frontend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - run: bun --version
      - name: Install frontend dependencies
        run: bun install --frozen-lockfile
      - run: bun run lint:frontend
      - run: bun run lint:types
        if: success() || failure()
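The final step's `if: success() || failure()` lets `bun run lint:types` run even when `bun run lint:frontend` fails, so one CI run reports both lint results. Roughly speaking, only a cancelled run skips it. Below is a simplified Python model of the two status functions. It assumes that earlier step outcomes and a cancellation flag are the only inputs. Real GitHub Actions also weighs job dependencies and offers `always()` and `cancelled()`, and `JobState` is a hypothetical type.

```python
from dataclasses import dataclass, field


@dataclass
class JobState:
    """Simplified view of a job when a step's `if:` is evaluated."""

    # Outcomes of earlier steps, e.g. "success", "failure", "skipped".
    previous_outcomes: list[str] = field(default_factory=list)
    cancelled: bool = False


def failure(state: JobState) -> bool:
    # True when any earlier step in the job failed.
    return any(outcome == "failure" for outcome in state.previous_outcomes)


def success(state: JobState) -> bool:
    # True when nothing earlier failed and the run was not cancelled.
    return not state.cancelled and not failure(state)


def runs_lint_types(state: JobState) -> bool:
    # Mirrors `if: success() || failure()` on the `bun run lint:types` step.
    return success(state) or failure(state)
```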

300

ollama-webui/ollama-webui-main/.gitignore

vendored

Normal file

@@ -0,0 +1,300 @@
.DS_Store
node_modules
/build
/.svelte-kit
/package
.env
.env.*
!.env.example
vite.config.js.timestamp-*
vite.config.ts.timestamp-*
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib64/
parts/
sdist/
var/
wheels/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/
cover/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
.pybuilder/
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
# For a library or package, you might want to ignore these files since the code is
# intended to run in multiple environments; otherwise, check them in:
# .python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# poetry
# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
# This is especially recommended for binary packages to ensure reproducibility, and is more
# commonly ignored for libraries.
# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
#poetry.lock

# pdm
# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
#pdm.lock
# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
# in version control.
# https://pdm.fming.dev/#use-with-ide
.pdm.toml

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# pytype static type analyzer
.pytype/

# Cython debug symbols
cython_debug/

# PyCharm
# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
# and can be added to the global gitignore or merged into this file. For a more nuclear
# option (not recommended) you can uncomment the following to ignore the entire idea folder.
#.idea/

# Logs
logs
*.log
npm-debug.log*
yarn-debug.log*
yarn-error.log*
lerna-debug.log*
.pnpm-debug.log*

# Diagnostic reports (https://nodejs.org/api/report.html)
report.[0-9]*.[0-9]*.[0-9]*.[0-9]*.json

# Runtime data
pids
*.pid
*.seed
*.pid.lock

# Directory for instrumented libs generated by jscoverage/JSCover
lib-cov

# Coverage directory used by tools like istanbul
coverage
*.lcov

# nyc test coverage
.nyc_output

# Grunt intermediate storage (https://gruntjs.com/creating-plugins#storing-task-files)
.grunt

# Bower dependency directory (https://bower.io/)
bower_components

# node-waf configuration
.lock-wscript

# Compiled binary addons (https://nodejs.org/api/addons.html)
build/Release

# Dependency directories
node_modules/
jspm_packages/

# Snowpack dependency directory (https://snowpack.dev/)
web_modules/

# TypeScript cache
*.tsbuildinfo

# Optional npm cache directory
.npm

# Optional eslint cache
.eslintcache

# Optional stylelint cache
.stylelintcache

# Microbundle cache
.rpt2_cache/
.rts2_cache_cjs/
.rts2_cache_es/
.rts2_cache_umd/

# Optional REPL history
.node_repl_history

# Output of 'npm pack'
*.tgz

# Yarn Integrity file
.yarn-integrity

# dotenv environment variable files
.env
.env.development.local
.env.test.local
.env.production.local
.env.local

# parcel-bundler cache (https://parceljs.org/)
.cache
.parcel-cache

# Next.js build output
.next
out

# Nuxt.js build / generate output
.nuxt
dist

# Gatsby files
.cache/
# Comment in the public line in if your project uses Gatsby and not Next.js
# https://nextjs.org/blog/next-9-1#public-directory-support
# public

# vuepress build output
.vuepress/dist

# vuepress v2.x temp and cache directory
.temp
.cache

# Docusaurus cache and generated files
.docusaurus

# Serverless directories
.serverless/

# FuseBox cache
.fusebox/

# DynamoDB Local files
.dynamodb/

# TernJS port file
.tern-port

# Stores VSCode versions used for testing VSCode extensions
.vscode-test

# yarn v2
.yarn/cache
.yarn/unplugged
.yarn/build-state.yml
.yarn/install-state.gz
.pnp.*

1

ollama-webui/ollama-webui-main/.npmrc

Normal file

@@ -0,0 +1 @@
engine-strict=true

16

ollama-webui/ollama-webui-main/.prettierignore

Normal file

@@ -0,0 +1,16 @@
.DS_Store
node_modules
/build
/.svelte-kit
/package
.env
.env.*
!.env.example

# Ignore files for PNPM, NPM and YARN
pnpm-lock.yaml
package-lock.json
yarn.lock

# Ignore kubernetes files
kubernetes

9

ollama-webui/ollama-webui-main/.prettierrc

Normal file

@@ -0,0 +1,9 @@
{
	"useTabs": true,
	"singleQuote": true,
	"trailingComma": "none",
	"printWidth": 100,
	"plugins": ["prettier-plugin-svelte"],
	"pluginSearchDirs": ["."],
	"overrides": [{ "files": "*.svelte", "options": { "parser": "svelte" } }]
}

64

ollama-webui/ollama-webui-main/Caddyfile.localhost

Normal file

@@ -0,0 +1,64 @@
# Run with
# caddy run --envfile ./example.env --config ./Caddyfile.localhost
#
# This is configured for
# - Automatic HTTPS (even for localhost)
# - Reverse Proxying to Ollama API Base URL (http://localhost:11434/api)
# - CORS
# - HTTP Basic Auth API Tokens (uncomment basicauth section)


# CORS Preflight (OPTIONS) + Request (GET, POST, PATCH, PUT, DELETE)
(cors-api) {
	@match-cors-api-preflight method OPTIONS
	handle @match-cors-api-preflight {
		header {
			Access-Control-Allow-Origin "{http.request.header.origin}"
			Access-Control-Allow-Methods "GET, POST, PUT, PATCH, DELETE, OPTIONS"
			Access-Control-Allow-Headers "Origin, Accept, Authorization, Content-Type, X-Requested-With"
			Access-Control-Allow-Credentials "true"
			Access-Control-Max-Age "3600"
			defer
		}
		respond "" 204
	}

	@match-cors-api-request {
		not {
			header Origin "{http.request.scheme}://{http.request.host}"
		}
		header Origin "{http.request.header.origin}"
	}
	handle @match-cors-api-request {
		header {
			Access-Control-Allow-Origin "{http.request.header.origin}"
			Access-Control-Allow-Methods "GET, POST, PUT, PATCH, DELETE, OPTIONS"
			Access-Control-Allow-Headers "Origin, Accept, Authorization, Content-Type, X-Requested-With"
			Access-Control-Allow-Credentials "true"
			Access-Control-Max-Age "3600"
			defer
		}
	}
}

# replace localhost with example.com or whatever
localhost {
	## HTTP Basic Auth
	## (uncomment to enable)
	# basicauth {
	# # see .example.env for how to generate tokens
	# {env.OLLAMA_API_ID} {env.OLLAMA_API_TOKEN_DIGEST}
	# }

	handle /api/* {
		# Comment to disable CORS
		import cors-api

		reverse_proxy localhost:11434
	}

	# Same-Origin Static Web Server
	file_server {
		root ./build/
	}
}
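Below is a Python sketch of the decision the `(cors-api)` snippet makes for requests under `/api/*`, written as a plain function rather than as Caddy config. It makes three assumptions. `handle_api` is a hypothetical name. The cross-origin check is simplified to an exact string comparison against `scheme://host`. A non-preflight request is assumed to continue to `reverse_proxy localhost:11434` after its headers are set.

```python
from typing import Optional

ALLOW_METHODS = "GET, POST, PUT, PATCH, DELETE, OPTIONS"
ALLOW_HEADERS = "Origin, Accept, Authorization, Content-Type, X-Requested-With"


def cors_headers(origin: str) -> dict[str, str]:
    # The Caddyfile echoes the caller's Origin ({http.request.header.origin})
    # instead of using "*", which browsers reject when credentials are allowed.
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": ALLOW_METHODS,
        "Access-Control-Allow-Headers": ALLOW_HEADERS,
        "Access-Control-Allow-Credentials": "true",
        "Access-Control-Max-Age": "3600",
    }


def handle_api(
    method: str, scheme: str, host: str, origin: Optional[str]
) -> tuple[Optional[int], dict[str, str]]:
    """Return (status, CORS headers) for a request under /api/*.

    A status of 204 means the preflight is answered directly; None means the
    request is passed on to reverse_proxy localhost:11434.
    """
    if method == "OPTIONS":
        # @match-cors-api-preflight: every OPTIONS request gets an empty 204.
        return 204, cors_headers(origin or "")
    if origin and origin != f"{scheme}://{host}":
        # @match-cors-api-request: an Origin that is not this site's own.
        return None, cors_headers(origin)
    # Same-origin request or no Origin header: no CORS headers are added.
    return None, {}
```

The config echoes any `Origin` back together with `Access-Control-Allow-Credentials: true`, so every website can make credentialed requests to the proxied API. That is convenient on localhost, but on a public host it is worth restricting to known origins.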

52

ollama-webui/ollama-webui-main/Dockerfile

Normal file

@@ -0,0 +1,52 @@
# syntax=docker/dockerfile:1

FROM node:alpine as build

WORKDIR /app

# wget embedding model weight from alpine (does not exist from slim-buster)
RUN wget "https://chroma-onnx-models.s3.amazonaws.com/all-MiniLM-L6-v2/onnx.tar.gz"

COPY package.json package-lock.json ./
RUN npm ci

COPY . .
RUN npm run build


FROM python:3.11-slim-bookworm as base

ENV ENV=prod
ENV PORT ""

ENV OLLAMA_API_BASE_URL "/ollama/api"

ENV OPENAI_API_BASE_URL ""
ENV OPENAI_API_KEY ""

ENV WEBUI_JWT_SECRET_KEY "SECRET_KEY"

WORKDIR /app

# copy embedding weight from build
RUN mkdir -p /root/.cache/chroma/onnx_models/all-MiniLM-L6-v2
COPY --from=build /app/onnx.tar.gz /root/.cache/chroma/onnx_models/all-MiniLM-L6-v2

RUN cd /root/.cache/chroma/onnx_models/all-MiniLM-L6-v2 &&\
    tar -xzf onnx.tar.gz

# copy built frontend files
COPY --from=build /app/build /app/build

WORKDIR /app/backend

COPY ./backend/requirements.txt ./requirements.txt

RUN pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu --no-cache-dir
RUN pip3 install -r requirements.txt --no-cache-dir

# RUN python -c "from sentence_transformers import SentenceTransformer; model = SentenceTransformer('all-MiniLM-L6-v2')"

COPY ./backend .

CMD [ "sh", "start.sh"]
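The build stage downloads the Chroma ONNX archive for `all-MiniLM-L6-v2`, and the runtime stage unpacks it into `/root/.cache/chroma/onnx_models/all-MiniLM-L6-v2`. The likely purpose is to avoid fetching the embedding model when the container starts. Below is a Python sketch of the same unpacking step. `install_onnx_weights` is a hypothetical helper and not part of the backend. The cache root is passed in as a parameter in place of `/root/.cache`, and the archive is read in place instead of being copied first.

```python
import tarfile
from pathlib import Path


def install_onnx_weights(archive: Path, cache_root: Path) -> Path:
    """Unpack onnx.tar.gz into <cache_root>/chroma/onnx_models/all-MiniLM-L6-v2."""
    target = cache_root / "chroma" / "onnx_models" / "all-MiniLM-L6-v2"
    target.mkdir(parents=True, exist_ok=True)  # like `RUN mkdir -p ...`
    with tarfile.open(archive, "r:gz") as tar:  # like `tar -xzf onnx.tar.gz`
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons can reject absolute paths and '..' members.
            tar.extractall(target, filter="data")
        else:
            tar.extractall(target)
    return target
```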

35

ollama-webui/ollama-webui-main/INSTALLATION.md

Normal file

@@ -0,0 +1,35 @@
### Installing Both Ollama and Ollama Web UI Using Kustomize

For cpu-only pod

```bash
kubectl apply -f ./kubernetes/manifest/base
```

For gpu-enabled pod

```bash
kubectl apply -k ./kubernetes/manifest
```

### Installing Both Ollama and Ollama Web UI Using Helm

Package Helm file first

```bash
helm package ./kubernetes/helm/
```

For cpu-only pod

```bash
helm install ollama-webui ./ollama-webui-*.tgz
```

For gpu-enabled pod

```bash
helm install ollama-webui ./ollama-webui-*.tgz --set ollama.resources.limits.nvidia.com/gpu="1"
```

Check the `kubernetes/helm/values.yaml` file to know which parameters are available for customization
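Helm's `--set` treats unescaped dots in a key as nesting separators. Its documentation shows escaping them with a backslash, as in `kubernetes\.io/role`. This matters for resource names that contain a dot, such as the `nvidia.com/gpu` key in the GPU command. The shell also strips the quotes in `="1"`, so Helm receives `...=1`. Below is a simplified Python model of the key splitting, written for illustration only. `parse_set` is hypothetical and ignores lists, commas, and Helm's type coercion.

```python
def parse_set(expr: str) -> dict:
    """Turn one `--set key=value` expression into a nested dict.

    Simplified model: an unescaped '.' separates path segments and a
    backslash before '.' keeps the dot literal. Lists, commas and Helm's
    type coercion are not handled; the value is kept as a string.
    """
    key, _, value = expr.partition("=")
    segments: list[str] = []
    current = ""
    i = 0
    while i < len(key):
        if key[i] == "\\" and i + 1 < len(key) and key[i + 1] == ".":
            current += "."  # escaped dot stays inside the current segment
            i += 2
            continue
        if key[i] == ".":
            segments.append(current)  # unescaped dot starts a new level
            current = ""
        else:
            current += key[i]
        i += 1
    segments.append(current)

    result: dict = {}
    node = result
    for segment in segments[:-1]:
        node = node.setdefault(segment, {})
    node[segments[-1]] = value
    return result


if __name__ == "__main__":
    print(parse_set("ollama.resources.limits.nvidia.com/gpu=1"))
    print(parse_set(r"ollama.resources.limits.nvidia\.com/gpu=1"))
```

Under this model, the unescaped key yields a `nvidia` map containing `com/gpu` rather than an `nvidia.com/gpu` limit. Escaping the dot inside single quotes, for example `--set 'ollama.resources.limits.nvidia\.com/gpu=1'`, keeps it literal. Check the result against the chart's `values.yaml` (for instance with `helm template`) before relying on either form.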

21

ollama-webui/ollama-webui-main/LICENSE

Normal file

@@ -0,0 +1,21 @@
MIT License

Copyright (c) 2023 Timothy Jaeryang Baek

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

298

ollama-webui/ollama-webui-main/README.md

Normal file

@@ -0,0 +1,298 @@

|

||||

# Ollama Web UI: A User-Friendly Web Interface for Chat Interactions 👋

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

[](https://discord.gg/5rJgQTnV4s)

|

||||

[](https://github.com/sponsors/tjbck)

|

||||

|

||||

ChatGPT-Style Web Interface for Ollama 🦙

|

||||

|

||||

**Disclaimer:** _ollama-webui is a community-driven project and is not affiliated with the Ollama team in any way. This initiative is independent, and any inquiries or feedback should be directed to [our community on Discord](https://discord.gg/5rJgQTnV4s). We kindly request users to refrain from contacting or harassing the Ollama team regarding this project._

|

||||

|

||||

|

||||

|

||||

Also check our sibling project, [OllamaHub](https://ollamahub.com/), where you can discover, download, and explore customized Modelfiles for Ollama! 🦙🔍

|

||||

|

||||

## Features ⭐

- 🖥️ **Intuitive Interface**: Our chat interface takes inspiration from ChatGPT, ensuring a user-friendly experience.

- 📱 **Responsive Design**: Enjoy a seamless experience on both desktop and mobile devices.

- ⚡ **Swift Responsiveness**: Enjoy fast and responsive performance.

- 🚀 **Effortless Setup**: Install seamlessly using Docker or Kubernetes (kubectl, kustomize or helm) for a hassle-free experience.

- 💻 **Code Syntax Highlighting**: Enjoy enhanced code readability with our syntax highlighting feature.

- ✒️🔢 **Full Markdown and LaTeX Support**: Elevate your LLM experience with comprehensive Markdown and LaTeX capabilities for enriched interaction.

- 📚 **Local RAG Integration**: Dive into the future of chat interactions with Retrieval Augmented Generation (RAG) support. This feature integrates document interactions into your chat experience: you can load documents directly into the chat or add files to your document library, then access them with the `#` command in the prompt. The feature is in its alpha phase, so occasional issues may arise while we refine it for better performance and reliability.

- 📜 **Prompt Preset Support**: Instantly access preset prompts using the `/` command in the chat input. Load predefined conversation starters effortlessly and expedite your interactions. Import prompts easily through [OllamaHub](https://ollamahub.com/) integration.

- 👍👎 **RLHF Annotation**: Rate messages with thumbs up and thumbs down to build datasets for Reinforcement Learning from Human Feedback (RLHF). Use your rated messages to train or fine-tune models, while the data stays saved locally.

- 📥🗑️ **Download/Delete Models**: Easily download or remove models directly from the web UI.

- ⬆️ **GGUF File Model Creation**: Effortlessly create Ollama models by uploading GGUF files directly from the web UI. You can upload a file from your machine or download GGUF files from Hugging Face.

- 🤖 **Multiple Model Support**: Seamlessly switch between different chat models for diverse interactions.

- 🔄 **Multi-Modal Support**: Seamlessly engage with models that support multimodal interactions, including images (e.g., LLaVA).

- 🧩 **Modelfile Builder**: Easily create Ollama modelfiles via the web UI. Create and add characters/agents, customize chat elements, and import modelfiles effortlessly through [OllamaHub](https://ollamahub.com/) integration.

- ⚙️ **Many Models Conversations**: Engage with several models simultaneously, harnessing their unique strengths for optimal responses.

- 💬 **Collaborative Chat**: Harness the collective intelligence of multiple models by orchestrating group conversations. Use the `@` command to specify the model, enabling dynamic and diverse dialogues within your chat interface.

- 🤝 **OpenAI API Integration**: Effortlessly integrate an OpenAI-compatible API for versatile conversations alongside Ollama models. Customize the API Base URL to link with **LMStudio, Mistral, OpenRouter, and more**.

- 🔄 **Regeneration History Access**: Easily revisit and explore your entire regeneration history.

- 📜 **Chat History**: Effortlessly access and manage your conversation history.

- 📤📥 **Import/Export Chat History**: Seamlessly move your chat data in and out of the platform.

- 🗣️ **Voice Input Support**: Engage with your model through voice interactions and talk to your model directly. Optionally, voice input can be sent automatically after 3 seconds of silence.

- ⚙️ **Fine-Tuned Control with Advanced Parameters**: Gain a deeper level of control by adjusting parameters such as temperature and defining your system prompts to tailor the conversation to your specific preferences and needs.

- 🔗 **External Ollama Server Connection**: Link to an external Ollama server hosted at a different address by setting the `OLLAMA_API_BASE_URL` environment variable.

- 🔐 **Role-Based Access Control (RBAC)**: Ensure secure access with restricted permissions: only authorized users can access your Ollama instance, and model creation and pulling rights are reserved for administrators.

- 🔒 **Backend Reverse Proxy Support**: Bolster security through direct communication between the Ollama Web UI backend and Ollama, which removes the need to expose Ollama over the LAN. Requests made from the web UI to the `/ollama/api` route are forwarded to Ollama by the backend.

- 🌟 **Continuous Updates**: We are committed to improving Ollama Web UI with regular updates and new features.

## 🔗 Also Check Out OllamaHub!

Don't forget to explore our sibling project, [OllamaHub](https://ollamahub.com/), where you can discover, download, and explore customized Modelfiles. OllamaHub offers a wide range of exciting possibilities for enhancing your chat interactions with Ollama! 🚀

## How to Install 🚀

🌟 **Important Note on User Roles and Privacy:**

- **Admin Creation:** The very first account to sign up on the Ollama Web UI will be granted **Administrator privileges**. This account will have comprehensive control over the platform, including user management and system settings.

- **User Registrations:** All subsequent users who sign up will have their accounts set to **Pending** status by default. These accounts require approval from the Administrator before they can use the platform.

- **Privacy and Data Security:** We prioritize your privacy and data security above all. All data entered into the Ollama Web UI is stored locally on your device. The system is designed to be privacy-first: no external requests are made, and your data does not leave your local environment. We are committed to maintaining the highest standards of data privacy and security, ensuring that your information remains confidential and under your control.
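
The backend enforces these roles in its Ollama proxy route (`backend/apps/ollama/main.py`). `user` and `admin` accounts may call Ollama, but the `pull`, `delete`, `push`, `copy` and `create` endpoints are reserved for admins. Any other role, such as a pending account, is rejected. The sketch below restates that rule as a small pure function. It is for illustration only: `is_request_allowed` is a hypothetical name and is not part of the project's API.

```python
# Hypothetical helper restating the role check done by the backend proxy.
ADMIN_ONLY_PATHS = {"pull", "delete", "push", "copy", "create"}
ALLOWED_ROLES = {"user", "admin"}


def is_request_allowed(role: str, path: str) -> bool:
    """Return True if an account with `role` may call the Ollama endpoint `path`.

    `path` is the part after /ollama/api/, e.g. "tags" or "pull".
    """
    if role not in ALLOWED_ROLES:
        # Pending (or any unknown) accounts are rejected outright.
        return False
    if path in ADMIN_ONLY_PATHS:
        # Model management endpoints are reserved for administrators.
        return role == "admin"
    return True


if __name__ == "__main__":
    print(is_request_allowed("pending", "tags"))  # False
    print(is_request_allowed("user", "tags"))  # True
    print(is_request_allowed("user", "pull"))  # False
    print(is_request_allowed("admin", "pull"))  # True
```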

### Steps to Install Ollama Web UI

#### Before You Begin

1. **Installing Docker:**

   - **For Windows and Mac Users:**

     - Download Docker Desktop from [Docker's official website](https://www.docker.com/products/docker-desktop).
     - Follow the installation instructions provided on the website. After installation, open Docker Desktop to ensure it's running properly.

   - **For Ubuntu and Other Linux Users:**

     - Open your terminal.
     - Set up your Docker apt repository according to the [Docker documentation](https://docs.docker.com/engine/install/ubuntu/#install-using-the-repository).
     - Update your package index:

       ```bash
       sudo apt-get update
       ```

     - Install Docker using the following command:

       ```bash
       sudo apt-get install docker-ce docker-ce-cli containerd.io
       ```

     - Verify the Docker installation with:

       ```bash
       sudo docker run hello-world
       ```

       This command downloads a test image and runs it in a container, which prints an informational message.

2. **Ensure You Have the Latest Version of Ollama:**

   - Download the latest version from [https://ollama.ai/](https://ollama.ai/).

3. **Verify Ollama Installation:**

   - After installing Ollama, check that it is working by visiting [http://127.0.0.1:11434/](http://127.0.0.1:11434/) in your web browser. Note that the port number might be different in your setup.
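
If you prefer a scripted check to the browser, the sketch below sends a GET request to the Ollama base URL and reports whether it answered with a success status. It assumes the default address `http://127.0.0.1:11434` and uses only the Python standard library. `ollama_is_reachable` is a hypothetical helper name.

```python
# Minimal sketch: check whether an Ollama server answers at a base URL.
from urllib.request import urlopen


def ollama_is_reachable(base_url: str = "http://127.0.0.1:11434", timeout: float = 3.0) -> bool:
    """Return True if a GET request to base_url succeeds with a 2xx status."""
    try:
        with urlopen(base_url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, ValueError):
        # OSError covers URLError/HTTPError, refused connections and timeouts;
        # ValueError is raised for malformed URLs such as a missing scheme.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_reachable())
```

If this prints `False` while Ollama is running, confirm the port. If the check runs inside a container, also see the connection notes in the troubleshooting guide.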

#### Installing with Docker 🐳

- **Important:** When using Docker to install Ollama Web UI, make sure to include the `-v ollama-webui:/app/backend/data` flag in your Docker command. It mounts a named volume for the database, so your data persists when the container is removed or updated.

- **If Ollama is on your computer**, use this command:

```bash
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v ollama-webui:/app/backend/data --name ollama-webui --restart always ghcr.io/ollama-webui/ollama-webui:main
```

- **To build the container yourself**, follow these steps:

```bash
docker build -t ollama-webui .
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v ollama-webui:/app/backend/data --name ollama-webui --restart always ollama-webui
```

- After installation, you can access Ollama Web UI at [http://localhost:3000](http://localhost:3000).

#### Using Ollama on a Different Server

- To connect to Ollama on another server, set the `OLLAMA_API_BASE_URL` environment variable to that server's URL, including the `/api` suffix:

```bash
docker run -d -p 3000:8080 -e OLLAMA_API_BASE_URL=https://example.com/api -v ollama-webui:/app/backend/data --name ollama-webui --restart always ghcr.io/ollama-webui/ollama-webui:main
```

Or for a self-built container:

```bash
docker build -t ollama-webui .
docker run -d -p 3000:8080 -e OLLAMA_API_BASE_URL=https://example.com/api -v ollama-webui:/app/backend/data --name ollama-webui --restart always ollama-webui
```
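
The two `docker run` variants above differ only in how the container finds Ollama. If Ollama runs on the same machine, use `--add-host=host.docker.internal:host-gateway`. If it runs elsewhere, use `-e OLLAMA_API_BASE_URL=...` instead. Both variants mount the data volume. The sketch below builds either command as an argument list, which may help if you script deployments from Python. `webui_run_args` is a hypothetical helper. It only builds and prints the commands; pass the list to `subprocess.run` yourself to execute it.

```python
# Hypothetical helper that assembles the `docker run` commands shown above.
import shlex

IMAGE = "ghcr.io/ollama-webui/ollama-webui:main"


def webui_run_args(ollama_api_base_url=None, image=IMAGE, host_port=3000):
    """Build the docker run argument list; the data volume is always mounted."""
    args = ["docker", "run", "-d", "-p", f"{host_port}:8080"]
    if ollama_api_base_url is None:
        # Ollama on this machine: let the container resolve host.docker.internal.
        args.append("--add-host=host.docker.internal:host-gateway")
    else:
        # Ollama elsewhere: point the backend at it explicitly (keep the /api suffix).
        args += ["-e", f"OLLAMA_API_BASE_URL={ollama_api_base_url}"]
    args += [
        "-v", "ollama-webui:/app/backend/data",  # persists the database
        "--name", "ollama-webui",
        "--restart", "always",
        image,
    ]
    return args


if __name__ == "__main__":
    print(shlex.join(webui_run_args()))
    print(shlex.join(webui_run_args("https://example.com/api")))
```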

### Installing Ollama and Ollama Web UI Together

#### Using Docker Compose

- If you don't have Ollama yet, use Docker Compose to install both together. Run this command:

```bash
docker compose up -d --build
```

- **For GPU Support:** Use an additional Docker Compose file:

```bash
docker compose -f docker-compose.yaml -f docker-compose.gpu.yaml up -d --build
```

- **To Expose Ollama API:** Use another Docker Compose file:

```bash
docker compose -f docker-compose.yaml -f docker-compose.api.yaml up -d --build
```

#### Using `run-compose.sh` Script (Linux or Docker-Enabled WSL2 on Windows)

- Give execute permission to the script:

```bash
chmod +x run-compose.sh
```

- For a CPU-only container:

```bash
./run-compose.sh
```

- For GPU support (read the note about GPU compatibility):

```bash
./run-compose.sh --enable-gpu
```

- To build the latest local version, add `--build`:

```bash
./run-compose.sh --enable-gpu --build
```

### Alternative Installation Methods

For other installation methods, such as Kustomize or Helm, see [INSTALLATION.md](/INSTALLATION.md). Join our [Ollama Web UI Discord community](https://discord.gg/5rJgQTnV4s) for more help and information.

## How to Install Without Docker

We strongly recommend the Docker container installation for optimal support. However, we understand that some situations, especially development, may call for a non-Docker setup. Please note that non-Docker installations are not officially supported, and you might need to troubleshoot on your own.

### Project Components

The Ollama Web UI consists of two primary components: the frontend and the backend. The backend acts as a reverse proxy, serves the static frontend files, and provides additional features. Both need to be running concurrently for the development environment.

> [!IMPORTANT]
> The backend is required for proper functionality.

### Requirements 📦

- 🐰 [Bun](https://bun.sh) >= 1.0.21 or 🐢 [Node.js](https://nodejs.org/en) >= 20.10
- 🐍 [Python](https://python.org) >= 3.11
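
Before building, you may want to confirm that your toolchain meets the minimum versions listed above. The sketch below is one way to do that. It assumes that `node --version` and `bun --version` print a dotted version number (for example `v20.10.0`), and only one of Node.js or Bun is needed. The helper names are hypothetical.

```python
# Minimal sketch: compare installed tool versions with the minimums listed above.
import re
import shutil
import subprocess
import sys

PYTHON_MINIMUM = (3, 11)
TOOL_MINIMUMS = {"node": (20, 10), "bun": (1, 0, 21)}  # either tool is enough


def parse_version(text):
    """Extract the first dotted number, e.g. 'v20.10.0' -> (20, 10, 0)."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(found, minimum):
    """Compare version tuples after padding the shorter one with zeros."""
    width = max(len(found), len(minimum))
    found = found + (0,) * (width - len(found))
    minimum = minimum + (0,) * (width - len(minimum))
    return found >= minimum


def tool_version(cmd):
    """Return the parsed `<cmd> --version` output, or None if unavailable."""
    if shutil.which(cmd) is None:
        return None
    result = subprocess.run([cmd, "--version"], capture_output=True, text=True)
    try:
        return parse_version(result.stdout)
    except ValueError:
        return None


if __name__ == "__main__":
    print("python:", sys.version_info[:2] >= PYTHON_MINIMUM)
    for cmd, minimum in TOOL_MINIMUMS.items():
        found = tool_version(cmd)
        print(f"{cmd}:", "not found" if found is None else meets_minimum(found, minimum))
```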

### Build and Install 🛠️

Run the following commands to install:

```sh
git clone https://github.com/ollama-webui/ollama-webui.git
cd ollama-webui/

# Copying required .env file
cp -RPp example.env .env

# Building Frontend Using Node
npm i
npm run build

# or Building Frontend Using Bun
# bun install
# bun run build

# Serving Frontend with the Backend
cd ./backend
pip install -r requirements.txt -U
sh start.sh
```

You should have the Ollama Web UI up and running at http://localhost:8080/. Enjoy! 😄

## Troubleshooting

See [TROUBLESHOOTING.md](/TROUBLESHOOTING.md) for troubleshooting information, or join our [Ollama Web UI Discord community](https://discord.gg/5rJgQTnV4s).

## What's Next? 🚀

### Roadmap 📝

Here are some exciting tasks on our roadmap:

- 🌐 **Web Browsing Capability**: Integrate web content directly into your chat. Browse and share information without leaving the conversation.
- 🔄 **Function Calling**: Run code directly within the chat. Execute functions and commands effortlessly, enhancing the functionality of your conversations.
- ⚙️ **Custom Python Backend Actions**: Extend your Ollama Web UI by creating or downloading custom Python backend actions tailored to your specific needs.
- 🧠 **Long-Term Memory**: Give agents persistent memory, so conversations feel continuous as agents remember and reference past interactions, creating a more cohesive and personalized user experience.
- 🧪 **Research-Centric Features**: Empower researchers in the fields of LLM and HCI with a comprehensive web UI for conducting user studies. Stay tuned for ongoing feature enhancements (e.g., surveys, analytics, and participant tracking) to facilitate their research.
- 📈 **User Study Tools**: Provide specialized tools, like heat maps and behavior tracking modules, to help researchers capture and analyze user behavior patterns precisely.
- 📚 **Enhanced Documentation**: Elevate your setup and customization experience with improved, comprehensive documentation.

Feel free to contribute and help us make Ollama Web UI even better! 🙌

## Supporters ✨

A big shoutout to our amazing supporters who are helping to make this project possible! 🙏

### Platinum Sponsors 🤍

- We're looking for Sponsors!

### Acknowledgments

Special thanks to [Prof. Lawrence Kim @ SFU](https://www.lhkim.com/) and [Prof. Nick Vincent @ SFU](https://www.nickmvincent.com/) for their invaluable support and guidance in shaping this project into a research endeavor. Grateful for your mentorship throughout the journey! 🙌

## License 📜

This project is licensed under the [MIT License](LICENSE) - see the [LICENSE](LICENSE) file for details. 📄

## Support 💬

If you have any questions, suggestions, or need assistance, please open an issue or join our
[Ollama Web UI Discord community](https://discord.gg/5rJgQTnV4s) or
[Ollama Discord community](https://discord.gg/ollama) to connect with us! 🤝

---

Created by [Timothy J. Baek](https://github.com/tjbck) - Let's make Ollama Web UI even more amazing together! 💪

ollama-webui/ollama-webui-main/TROUBLESHOOTING.md

# Ollama Web UI Troubleshooting Guide

|

||||

|

||||

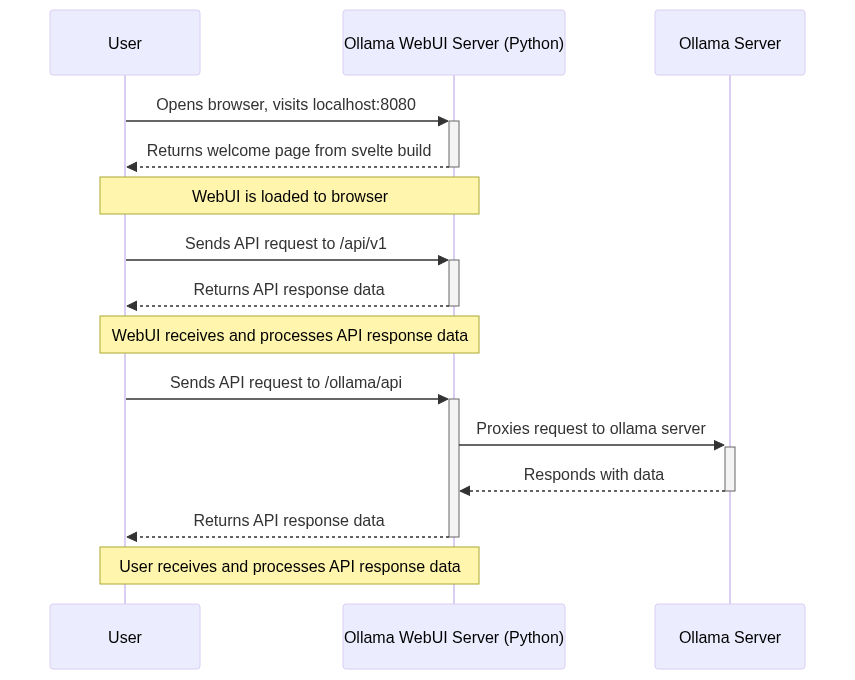

## Understanding the Ollama WebUI Architecture

|

||||

|

||||

The Ollama WebUI system is designed to streamline interactions between the client (your browser) and the Ollama API. At the heart of this design is a backend reverse proxy, enhancing security and resolving CORS issues.

|

||||

|

||||

- **How it Works**: The Ollama WebUI is designed to interact with the Ollama API through a specific route. When a request is made from the WebUI to Ollama, it is not directly sent to the Ollama API. Initially, the request is sent to the Ollama WebUI backend via `/ollama/api` route. From there, the backend is responsible for forwarding the request to the Ollama API. This forwarding is accomplished by using the route specified in the `OLLAMA_API_BASE_URL` environment variable. Therefore, a request made to `/ollama/api` in the WebUI is effectively the same as making a request to `OLLAMA_API_BASE_URL` in the backend. For instance, a request to `/ollama/api/tags` in the WebUI is equivalent to `OLLAMA_API_BASE_URL/tags` in the backend.

|

||||

|

||||

- **Security Benefits**: This design prevents direct exposure of the Ollama API to the frontend, safeguarding against potential CORS (Cross-Origin Resource Sharing) issues and unauthorized access. Requiring authentication to access the Ollama API further enhances this security layer.

|

||||

|

||||
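
In the backend, the forwarding rule is a plain string concatenation (`target_url = f"{app.state.OLLAMA_API_BASE_URL}/{path}"` in `backend/apps/ollama/main.py`). The sketch below reproduces that mapping so you can predict which upstream URL a WebUI request will hit. The function name `to_ollama_url` is hypothetical. The prefix check is an illustrative addition; the real route receives only the part after `/ollama/api/`.

```python
# Hypothetical helper mirroring the backend's path forwarding rule.
WEBUI_PREFIX = "/ollama/api/"


def to_ollama_url(webui_path, ollama_api_base_url):
    """Map a WebUI route such as '/ollama/api/tags' to OLLAMA_API_BASE_URL/tags."""
    if not webui_path.startswith(WEBUI_PREFIX):
        raise ValueError(f"{webui_path!r} is not under {WEBUI_PREFIX}")
    path = webui_path[len(WEBUI_PREFIX):]
    # Same plain concatenation as the backend, so a trailing slash on the
    # base URL would produce a double slash in the forwarded URL.
    return f"{ollama_api_base_url}/{path}"


if __name__ == "__main__":
    print(to_ollama_url("/ollama/api/tags", "http://localhost:11434/api"))
    # http://localhost:11434/api/tags
```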

## Ollama WebUI: Server Connection Error

If you're experiencing connection issues, the usual cause is that the WebUI Docker container cannot reach the Ollama server at 127.0.0.1:11434 (host.docker.internal:11434 from inside the container). Use the `--network=host` flag in your docker command to resolve this. Host networking bypasses Docker's port mapping, so the UI is served directly on port 8080 instead of 3000: `http://localhost:8080`.

**Example Docker Command**:

```bash
docker run -d --network=host -v ollama-webui:/app/backend/data -e OLLAMA_API_BASE_URL=http://127.0.0.1:11434/api --name ollama-webui --restart always ghcr.io/ollama-webui/ollama-webui:main
```

### General Connection Errors

**Ensure Ollama Version is Up-to-Date**: Always start by checking that you have the latest version of Ollama. Visit [Ollama's official site](https://ollama.ai/) for the latest updates.

**Troubleshooting Steps**:

1. **Verify Ollama URL Format**:
   - When running the Web UI container, ensure the `OLLAMA_API_BASE_URL` is correctly set, including the `/api` suffix (e.g., `http://192.168.1.1:11434/api` when Ollama runs on a different host).
   - In the Ollama WebUI, navigate to "Settings" > "General".
   - Confirm that the Ollama Server URL is correctly set to `[OLLAMA URL]/api` (e.g., `http://localhost:11434/api`), including the `/api` suffix.
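
The `/api` suffix is easy to miss. The sketch below checks an `OLLAMA_API_BASE_URL` value before you start the container. It is an assumption-based helper, not a validation the project performs. Its rules are drawn from the examples above: an http or https scheme, a host, and a path ending in `/api` with no trailing slash. The function name is hypothetical.

```python
# Minimal sketch: sanity-check an OLLAMA_API_BASE_URL value before using it.
from urllib.parse import urlparse


def check_ollama_api_base_url(url):
    """Return a list of problems with url; an empty list means it looks fine."""
    problems = []
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        problems.append("scheme should be http or https")
    if not parsed.hostname:
        problems.append("missing host")
    if not parsed.path.endswith("/api"):
        problems.append("path should end with the /api suffix (no trailing slash)")
    return problems


if __name__ == "__main__":
    for candidate in ("http://localhost:11434/api", "http://localhost:11434"):
        print(candidate, check_ollama_api_base_url(candidate) or "OK")
```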

Following these steps should resolve most connection issues. For further assistance, feel free to reach out to us on our community Discord.

ollama-webui/ollama-webui-main/backend/.dockerignore

__pycache__
.env
_old
uploads
.ipynb_checkpoints
*.db
_test

ollama-webui/ollama-webui-main/backend/.gitignore

__pycache__
.env
_old
uploads
.ipynb_checkpoints
*.db
_test
Pipfile
data/*

ollama-webui/ollama-webui-main/backend/apps/ollama/main.py

from fastapi import FastAPI, Request, Response, HTTPException, Depends
from fastapi.middleware.cors import CORSMiddleware
from fastapi.responses import StreamingResponse
from fastapi.concurrency import run_in_threadpool

import requests
import json
from pydantic import BaseModel

from apps.web.models.users import Users
from constants import ERROR_MESSAGES
from utils.utils import decode_token, get_current_user
from config import OLLAMA_API_BASE_URL, WEBUI_AUTH

app = FastAPI()
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_credentials=True,
    allow_methods=["*"],
    allow_headers=["*"],
)

app.state.OLLAMA_API_BASE_URL = OLLAMA_API_BASE_URL

# TARGET_SERVER_URL = OLLAMA_API_BASE_URL


@app.get("/url")
async def get_ollama_api_url(user=Depends(get_current_user)):
    if user and user.role == "admin":
        return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


class UrlUpdateForm(BaseModel):
    url: str


@app.post("/url/update")
async def update_ollama_api_url(
    form_data: UrlUpdateForm, user=Depends(get_current_user)
):
    if user and user.role == "admin":
        app.state.OLLAMA_API_BASE_URL = form_data.url
        return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


@app.api_route("/{path:path}", methods=["GET", "POST", "PUT", "DELETE"])
async def proxy(path: str, request: Request, user=Depends(get_current_user)):
    target_url = f"{app.state.OLLAMA_API_BASE_URL}/{path}"

    body = await request.body()
    headers = dict(request.headers)

    # Only approved accounts may use the proxy; model management is admin-only.
    if user.role in ["user", "admin"]:
        if path in ["pull", "delete", "push", "copy", "create"]:
            if user.role != "admin":
                raise HTTPException(
                    status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED
                )
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)

    # Drop headers that refer to the WebUI rather than the upstream Ollama server.
    headers.pop("host", None)
    headers.pop("authorization", None)
    headers.pop("origin", None)
    headers.pop("referer", None)

    r = None

    def get_request():
        nonlocal r
        try:
            r = requests.request(
                method=request.method,
                url=target_url,
                data=body,
                headers=headers,
                stream=True,
            )

            r.raise_for_status()

            return StreamingResponse(
                r.iter_content(chunk_size=8192),
                status_code=r.status_code,
                headers=dict(r.headers),
            )
        except Exception as e:
            raise e

    try:
        return await run_in_threadpool(get_request)
    except Exception as e:
        error_detail = "Ollama WebUI: Server Connection Error"
        if r is not None:
            try:
                res = r.json()
                if "error" in res:
                    error_detail = f"Ollama: {res['error']}"
            except Exception:
                error_detail = f"Ollama: {e}"

        # A requests.Response is falsy for 4xx/5xx statuses, so compare against
        # None to forward the upstream status code instead of always using 500.
        raise HTTPException(
            status_code=r.status_code if r is not None else 500,
            detail=error_detail,
        )

ollama-webui/ollama-webui-main/backend/apps/ollama/old_main.py

from fastapi import FastAPI, Request, Response, HTTPException, Depends
from fastapi.middleware.cors import CORSMiddleware
from fastapi.responses import StreamingResponse

import requests
import json
from pydantic import BaseModel

from apps.web.models.users import Users
from constants import ERROR_MESSAGES
from utils.utils import decode_token, get_current_user
from config import OLLAMA_API_BASE_URL, WEBUI_AUTH

import aiohttp

app = FastAPI()
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_credentials=True,
    allow_methods=["*"],
    allow_headers=["*"],
)

app.state.OLLAMA_API_BASE_URL = OLLAMA_API_BASE_URL

# TARGET_SERVER_URL = OLLAMA_API_BASE_URL


@app.get("/url")
async def get_ollama_api_url(user=Depends(get_current_user)):
    if user and user.role == "admin":
        return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


class UrlUpdateForm(BaseModel):
    url: str


@app.post("/url/update")
async def update_ollama_api_url(
    form_data: UrlUpdateForm, user=Depends(get_current_user)
):
    if user and user.role == "admin":
        app.state.OLLAMA_API_BASE_URL = form_data.url
        return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


# async def fetch_sse(method, target_url, body, headers):
#     async with aiohttp.ClientSession() as session:
#         try:
#             async with session.request(
#                 method, target_url, data=body, headers=headers
#             ) as response:
#                 print(response.status)
#                 async for line in response.content:
#                     yield line
#         except Exception as e:
#             print(e)
#             error_detail = "Ollama WebUI: Server Connection Error"